DECAF: MEG-based Multimodal Database for Decoding Affective Physiological Responses

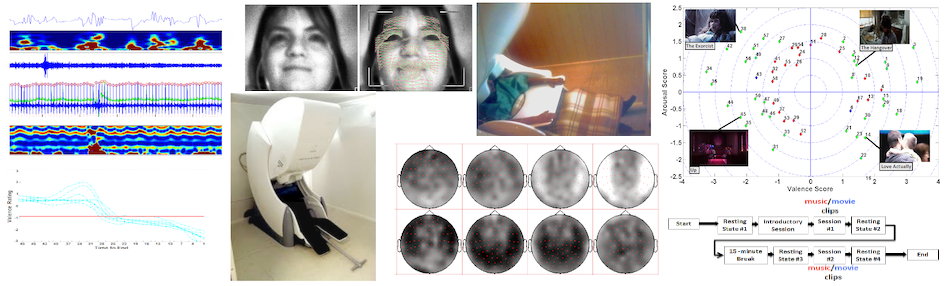

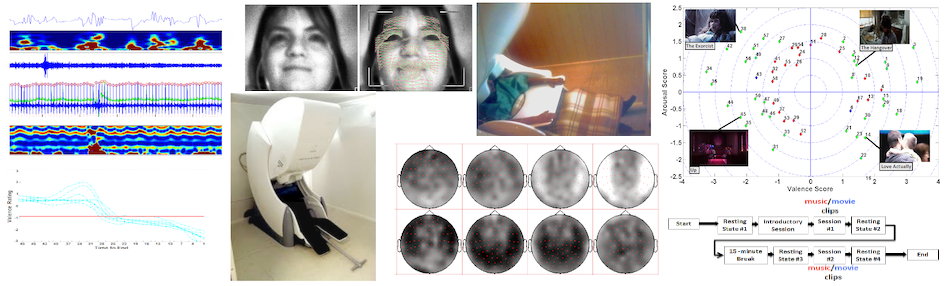

In this work, we present DECAF, a multimodal dataset for decoding user physiological responses to affective multimedia content. Unlike datasets such as DEAP and MAHNOB-HCI, DECAF contains (1) brain signals acquired using the Magnetoencephalogram (MEG) sensor, which requires little physical contact with the user’s scalp and consequently facilitates naturalistic affective response, and (2) explicit and implicit emotional responses of 30 participants to 36 movie clips and to the 40 one-minute music video segments used in DEAP, thereby enabling comparisons between the EEG and MEG modalities as well as between movie and music stimuli for affect recognition. In addition to MEG data, DECAF comprises synchronously recorded near-infrared (NIR) facial videos and horizontal Electrooculogram (hEOG), Electrocardiogram (ECG), and trapezius Electromyogram (tEMG) peripheral physiological responses. To demonstrate DECAF’s utility, we present (i) a detailed analysis of the correlations between participants’ self-assessments and their physiological responses and (ii) single-trial classification results for valence, arousal, and dominance, with performance evaluation against existing datasets. DECAF also contains time-continuous emotion annotations for movie clips from seven users, which we use to demonstrate dynamic emotion prediction.

To get access to the paper, please click here!

To get access to the data, please visit the following pages: